FusionAuth with intermittent node restarts

-

Thanks for fixing the IP address UI issue. Since we are still facing intermittent node restarts we decided to host fusionauth in Azure AppService to see if overrule the AKS cluster. We are still experiencing fusion node restarts every day.

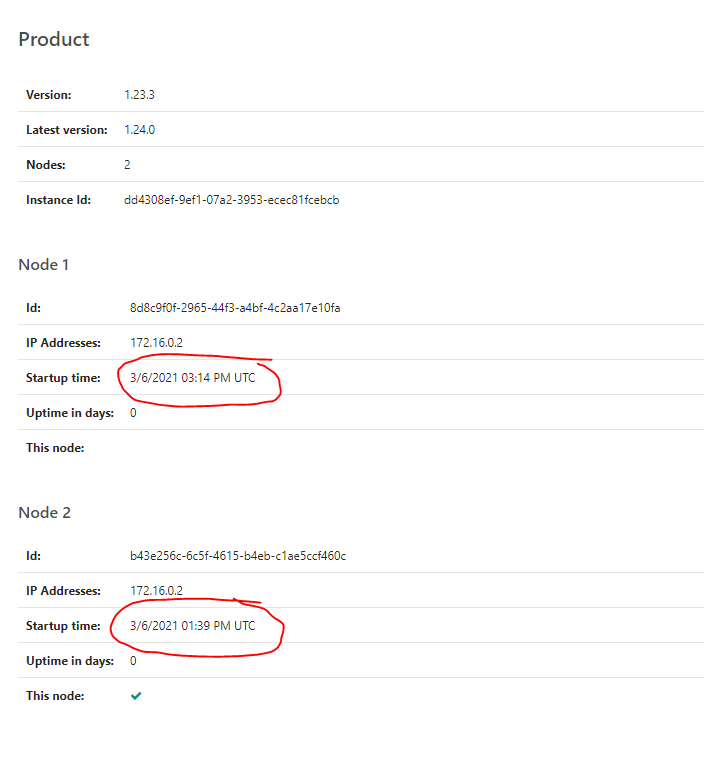

We managed to capture logs. Can you take a look and let us know what could be causing these restarts. Attached screenshot tells us when exactly the nodes got restarted and attached logs proves the restart.

Level,ResultDescription Informational," 2021-03-06 3:07:29.140 PM INFO io.fusionauth.api.service.system.NodeService - Node [b43e256c-6c5f-4615-b4eb-c1ae5ccf460c] with address [http://172.16.0.2:9011] removed because it has not checked in for the last [83] seconds. Bye node. " Informational," 2021-03-06 3:11:34.150 PM INFO io.fusionauth.api.service.system.NodeService - Node [b43e256c-6c5f-4615-b4eb-c1ae5ccf460c] promoted to master at [2021-03-06T15:11:34.149960Z], the previous master Node [22aedd8d-4d00-456d-a8f7-22882cb9b5c5] had not checked in since [2021-03-06T15:05:37.306Z] " Error," NOTE: Picked up JDK_JAVA_OPTIONS: --add-opens=java.base/java.lang=ALL-UNNAMED --add-opens=java.base/java.io=ALL-UNNAMED --add-opens=java.rmi/sun.rmi.transport=ALL-UNNAMED " Error," 06-Mar-2021 15:14:03.441 INFO [main] org.apache.catalina.core.AprLifecycleListener.lifecycleEvent The Apache Tomcat Native library which allows using OpenSSL was not found on the java.library.path: [/usr/java/packages/lib:/usr/lib64:/lib64:/lib:/usr/lib] " Error," 06-Mar-2021 15:14:04.266 INFO [main] org.apache.coyote.AbstractProtocol.init Initializing ProtocolHandler [""http-nio-9011""] " Error," 06-Mar-2021 15:14:04.310 INFO [main] org.apache.tomcat.util.net.NioSelectorPool.getSharedSelector Using a shared selector for servlet write/read " Error," 06-Mar-2021 15:14:04.350 INFO [main] org.apache.coyote.AbstractProtocol.init Initializing ProtocolHandler [""https-jsse-nio-9013""] " Error," 06-Mar-2021 15:14:04.966 INFO [main] org.apache.tomcat.util.net.NioSelectorPool.getSharedSelector Using a shared selector for servlet write/read " Error," 06-Mar-2021 15:14:04.972 INFO [main] org.apache.coyote.AbstractProtocol.init Initializing ProtocolHandler [""ajp-nio-127.0.0.1-9019""] " Error," 06-Mar-2021 15:14:04.979 INFO [main] org.apache.tomcat.util.net.NioSelectorPool.getSharedSelector Using a shared selector for servlet write/read " Error," 06-Mar-2021 15:14:04.983 INFO [main] org.apache.catalina.startup.Catalina.load Initialization processed in 2299 ms " Error," 06-Mar-2021 15:14:05.000 INFO [main] org.apache.catalina.core.StandardService.startInternal Starting service [Catalina] " Error," 06-Mar-2021 15:14:05.002 INFO [main] org.apache.catalina.core.StandardEngine.startInternal Starting Servlet Engine: Apache Tomcat/8.5.57 " Error," Mar 06, 2021 3:14:09 PM org.apache.jasper.servlet.TldScanner scanJars " Error," INFO: At least one JAR was scanned for TLDs yet contained no TLDs. Enable debug logging for this logger for a complete list of JARs that were scanned but no TLDs were found in them. Skipping unneeded JARs during scanning can improve startup time and JSP compilation time. " Informational," 2021-03-06 3:14:10.434 PM INFO io.fusionauth.api.configuration.DefaultFusionAuthConfiguration - Loading FusionAuth configuration file [/usr/local/fusionauth/config/fusionauth.properties] " Informational," 2021-03-06 3:14:10.459 PM INFO io.fusionauth.api.configuration.DefaultFusionAuthConfiguration - Dynamically set property [fusionauth-app.url] set to [http://172.16.0.2:9011] " Informational," " Informational," - Overriding default value of property [FUSIONAUTH_APP_RUNTIME_MODE] with value [production] " Informational," - Overriding default value of property [search.type] with value [database] " Informational," - Overriding default value of property [database.mysql.connector-version] with value [8.0.21] " Informational," - Overriding default value of property [database.mysql.enforce-utf8mb4] with value [true] " Informational," 2021-03-06 3:14:10.473 PM INFO com.inversoft.configuration.BasePropertiesFileInversoftConfiguration - " Informational," " Informational," 2021-03-06 3:14:10.474 PM WARN com.inversoft.configuration.BasePropertiesFileInversoftConfiguration - Your FusionAuth configuration file [/usr/local/fusionauth/fusionauth-app/apache-tomcat/../../config/fusionauth.properties] needs attention. Here are the warnings: " Informational," - You are using a deprecated configuration property name of [DATABASE_USER]. The new allowed names for that property are [database.username] " Informational," " Informational," 2021-03-06 3:14:10.488 PM INFO org.primeframework.mvc.servlet.PrimeServletContextListener - Initializing Prime " Informational," " Informational," " Informational," --------------------------------------------------------------------------------------------------------- " Informational," --------------------------------------------------------------------------------------------------------- " Informational," --------------------------------- Starting FusionAuth version [1.23.3] ---------------------------------- " Informational," 2021-03-06 3:14:10.490 PM INFO io.fusionauth.app.guice.FusionAuthModule - " Informational," 2021-03-06 3:14:11.232 PM INFO io.fusionauth.api.plugin.guice.PluginModule - No plugins found " Informational," 2021-03-06 3:14:12.272 PM INFO io.fusionauth.api.service.system.NodeService - Node [8d8c9f0f-2965-44f3-a4bf-4c2aa17e10fa] started. " Informational," 2021-03-06 3:14:14.225 PM INFO io.fusionauth.api.configuration.DefaultFusionAuthConfiguration - Loading FusionAuth configuration file [/usr/local/fusionauth/config/fusionauth.properties] " Informational," 2021-03-06 3:14:14.229 PM INFO io.fusionauth.api.configuration.DefaultFusionAuthConfiguration - Dynamically set property [fusionauth-app.url] set to [http://172.16.0.2:9011] " Informational," - Overriding default value of property [search.type] with value [database] " Informational," " Informational," 2021-03-06 3:14:14.232 PM INFO com.inversoft.configuration.BasePropertiesFileInversoftConfiguration - " Informational," - Overriding default value of property [database.mysql.connector-version] with value [8.0.21] " Informational," - Overriding default value of property [database.mysql.enforce-utf8mb4] with value [true] " Informational," - Overriding default value of property [FUSIONAUTH_APP_RUNTIME_MODE] with value [production] " Informational," " Informational," - You are using a deprecated configuration property name of [DATABASE_USER]. The new allowed names for that property are [database.username] " Informational," 2021-03-06 3:14:14.232 PM WARN com.inversoft.configuration.BasePropertiesFileInversoftConfiguration - Your FusionAuth configuration file [/usr/local/fusionauth/fusionauth-app/apache-tomcat/../../config/fusionauth.properties] needs attention. Here are the warnings: " Informational," " Informational," 2021-03-06 3:14:14.250 PM INFO com.inversoft.jdbc.hikari.DataSourceProvider - Connecting to PostgreSQL database at [jdbc:postgresql://fusionauth.postgres.database.azure.com/fusionauth] " Informational," 2021-03-06 3:14:14.254 PM WARN com.zaxxer.hikari.HikariConfig - HikariPool-1 - idleTimeout has been set but has no effect because the pool is operating as a fixed size pool. " Informational," 2021-03-06 3:14:14.258 PM INFO com.zaxxer.hikari.HikariDataSource - HikariPool-1 - Starting... " Informational," 2021-03-06 3:14:15.822 PM INFO com.zaxxer.hikari.HikariDataSource - HikariPool-1 - Start completed. " Informational," 2021-03-06 3:14:17.904 PM INFO com.inversoft.scheduler.DefaultScheduler - Starting up scheduler " Informational," 2021-03-06 3:14:17.905 PM INFO com.inversoft.scheduler.DefaultScheduler - Scheduler is running " Informational," 2021-03-06 3:14:23.153 PM INFO io.fusionauth.api.service.system.NodeService - Node [8d8c9f0f-2965-44f3-a4bf-4c2aa17e10fa] added with address [http://172.16.0.2:9011] " Error," 06-Mar-2021 15:14:24.481 INFO [main] org.apache.coyote.AbstractProtocol.start Starting ProtocolHandler [""http-nio-9011""] " Error," 06-Mar-2021 15:14:24.519 INFO [main] org.apache.coyote.AbstractProtocol.start Starting ProtocolHandler [""https-jsse-nio-9013""] " Error," 06-Mar-2021 15:14:24.540 INFO [main] org.apache.coyote.AbstractProtocol.start Starting ProtocolHandler [""ajp-nio-127.0.0.1-9019""] " Error," 06-Mar-2021 15:14:24.564 INFO [main] org.apache.catalina.startup.Catalina.start Server startup in 19580 ms " -

@venkata-dorisala I split this since it seemed like it was worth a new topic.

- What can you tell me about the nodes you are running (in terms of CPU and memory)?

- Do the restarts happen on any kind of pattern?

- Are there things that happen to stress the system at the time of the restarts?

- What version of FusionAuth are you on?

- What troubleshooting steps have you taken? What have you found?

I've definitely seen folks whose FusionAuth installs were restarting because the docker image was memory starved (see this post for example).

To be totally honest, this is probably an issue best addressed by the engineering team, but you'll need to purchase a support contract for them to be able to dig in.

-

What can you tell me about the nodes you are running (in terms of CPU and memory)?

A: each node 2 Core and 3.5 GB RAM

Do the restarts happen on any kind of pattern?

A: Restart happens randomly but after the system has been idle for few hours.

Are there things that happen to stress the system at the time of the restarts?

A: No, our system is used very lightly. Hardly used for couple of hours in a day.

What version of FusionAuth are you on?

A. 1.23.3, But we have been experiencing this since over a year.

What troubleshooting steps have you taken? What have you found?

A. We just tried to check the logs and shared with you whatever is found.

It looks like some health check is failing at fusion of which we don't have any details. If you can point us to the documentation which explains us about how load balancing works at fusion that might be helpful.Looks like only Premium plan offers engineering support. So do we get support for whole month for that 500$ a month or are there any number of support hours limit per month?

-

Hmmm.

each node 2 Core and 3.5 GB RAM

I'm not sure if anything else is running on the node, but if not, that should be plenty. Do you have other things running on those nodes?

Are the pods being reaped?

Restart happens randomly but after the system has been idle for few hours.

Hmmm. Does this happen with other pods on the same nodes? Or only to FusionAuth?

We just tried to check the logs and shared with you whatever is found.

OK. So the logs are saying that the FusionAuth node is no longer checking in. This means that it isn't active any more, so another master needs to be selected. But from what I can see in the logs, FusionAuth isn't doing the killing, something else is.

It looks like some health check is failing at fusion of which we don't have any details.

Sure, the health check is that the node hasn't checked in: "Node [b43e256c-6c5f-4615-b4eb-c1ae5ccf460c] with address [http://172.16.0.2:9011] removed because it has not checked in for the last [83] seconds. Bye node."

I looked at the code and all the node is doing is periodically running an update statement which sets the

last_checkin_instantand theurlof the application. So, is the connection of FusionAuth to the database being cut off (maybe because of database connection timeout settings)? Do you see any messages of the form `Node XXX address changed from YYY to ZZZ" which would indicate that the node ip address is being changed (but that the node isn't being killed, just moved)?If you can point us to the documentation which explains us about how load balancing works at fusion that might be helpful.

https://fusionauth.io/docs/v1/tech/installation-guide/cluster/ is the closest we have to that.

Looks like only Premium plan offers engineering support. So do we get support for whole month for that 500$ a month or are there any number of support hours limit per month?

That is correct, only premium or higher editions get you engineering support. You can see the current limits on the pricing page: https://fusionauth.io/pricing/editions/

"Assistance with integration, migration, and security (up to 3 hours per month)". System downtime is a different category which doesn't have those limits.

-

We do have other pods running in our AKS cluster but they don't restart. It's happening only with fusionauth.

It's possible that database connection might be cut off but again this isn't something we are managing. I think fusionauth is managing the connection pool.

I spin up fusionauth in my laptop (8 CPU and 32 GB RAM) using

docker composefrom documentation, i left my laptop ON for whole night and below are my findings.-

In Task manager, i see that

Vmmemincreased from 1125 to 1495MB over night. I'm not running any other containers. so i think fusion and postgres containers are using this memory. Memory isn't being reclaimed but it's increasing steadily even though there is no traffic at all. -

As per the logs below, Fusion was up at 8 PM but node got removed at 8 AM due to thread starvation.

fusionauth_1 | 2021-03-18 8:01:51.334 PM INFO io.fusionauth.api.service.system.NodeService - Node [0f5e6f11-1da1-4382-9fa4-1c259cff22e4] promoted to master at [2021-03-18T20:01:51.334041Z]

fusionauth_1 | 2021-03-19 8:02:29.504 AM WARN com.zaxxer.hikari.pool.HikariPool - HikariPool-1 - Thread starvation or clock leap detected (housekeeper delta=8h2m2s443ms338?s400ns).

fusionauth_1 | 2021-03-19 8:02:29.591 AM INFO io.fusionauth.api.service.system.NodeService - Node [0f5e6f11-1da1-4382-9fa4-1c259cff22e4] with address [http://fusionauth:9011] removed because it has not checked in for the last [28944] seconds. Bye node.

fusionauth_1 | 2021-03-19 8:06:31.046 AM WARN com.zaxxer.hikari.pool.HikariPool - HikariPool-1 - Thread starvation or clock leap detected (housekeeper delta=4m1s541ms835?s).

fusionauth_1 | 2021-03-19 8:06:31.101 AM INFO io.fusionauth.api.service.system.NodeService - Node [0f5e6f11-1da1-4382-9fa4-1c259cff22e4] with address [http://fusionauth:9011] removed because it has not checked in for the last [241] seconds. Bye node.I think it's easy to reproduce on any machine. So far we have reproduced it in Kubernetes, App Service and locally in a laptop.

-

-

That is weird. It looks like the hikari pool is losing the connection to the database, which then means that the node can't check in, so it then gets killed.

I'm not sure why the pool would be losing the connection; hard to know what is happening.

If you have detailed repo steps (which it sounds like you do), please file a bug in the github issues.

I will say that kubernetes is being run by plenty of our customers and I haven't heard of this issue before, but would love to get to the bottom of this.